Who Wrote This? Columbia Engineers Discover Novel Method to Identify AI-generated Text

Columbia Engineering researchers develop a novel approach that can detect AI-generated content without needing access to the AI's architecture, algorithms, or training data, a first in the field.

Media Contact

Holly Evarts, Director of Strategic Communications and Media Relations 347-453-7408 (c) | 212-854-3206 (o) | [email protected]

About the Study

CONFERENCE: International Conference on Learning Representations (ICLR) in Vienna, Austria, May 7-11, 2024.

TITLE: “Raidar (geneRative AI Detection viA Rewriting)”

AUTHORS: Chengzhi Mao, Carl Vondrick, and Junfeng Yang from Columbia Engineering; Hao Wang from Rutgers University.

FUNDING: This work is in part funded by a grant from the School of Engineering and Applied Science - Knight First Amendment Institute (SEAS-KFAI) Generative AI and Public Discourse Research Program.

Computer scientists at Columbia Engineering have developed a transformative method for detecting AI-generated text. Their findings promise to revolutionize how we authenticate digital content, addressing mounting concerns surrounding large language models (LLMs), digital integrity, misinformation, and trust.

Innovative approach to detecting AI-generated text

Computer Science Professors Junfeng Yang and Carl Vondrick spearheaded the development of Raidar (geneRative AI Detection viA Rewriting), which introduces an innovative approach for identifying whether text has been written by a human or generated by AI or LLMs like ChatGPT, without needing access to a model’s internal workings. The paper, which includes open-sourced code and datasets, will be presented at the International Conference on Learning Representations (ICLR) in Vienna, Austria, May 7-11, 2024.

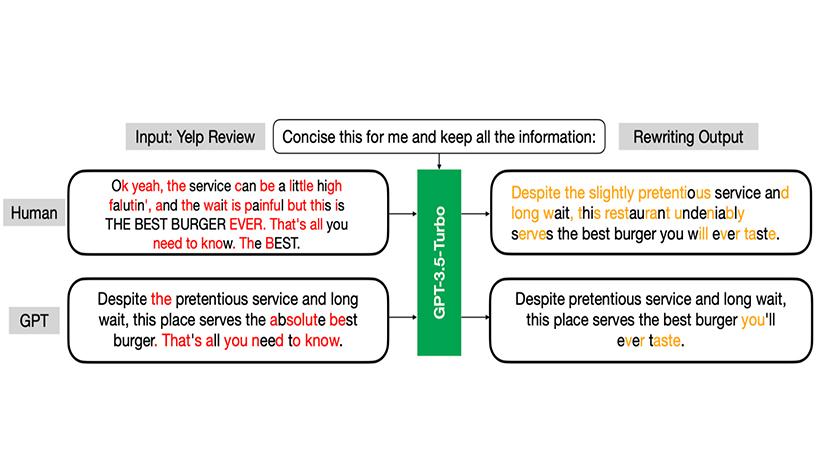

The researchers leveraged a unique characteristic of LLMs that they term “stubbornness”—LLMs show a tendency to alter human-written text more readily than AI-generated text. This occurs because LLMs often regard AI-generated text as already optimal and thus make minimal changes. The new approach, Raidar, uses a language model to rephrase or alter a given text and then measures how many edits the system makes to the given text. Raidar receives a piece of text, such as a social media post, product review, or blog post, and then prompts an LLM to rewrite it. The LLM replies with the rewritten text, and Raidar compares the original text with the rewritten text to measure modifications. Many edits mean the text is likely written by humans, while fewer modifications mean the text is likely machine-generated.

Raidar detects machine-generated text by calculating rewriting modifications. This illustration shows the character deletion in red and the character insertion in orange. Human-generated text tends to trigger more modifications than machine-generated text when asked to be rewritten. Credit: Yang and Vondrick labs
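The rewrite-and-compare step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' released code: `rewrite` is a hypothetical stand-in for whatever LLM call produces the rewritten text, the character diff uses Python's standard `difflib` rather than any metric confirmed by the paper, and the `threshold` value is an arbitrary placeholder that would need to be tuned on labeled data.

```python
from difflib import SequenceMatcher
from typing import Callable, Tuple


def count_char_edits(original: str, rewritten: str) -> Tuple[int, int]:
    """Return (deleted, inserted) character counts between two texts."""
    deleted = inserted = 0
    matcher = SequenceMatcher(None, original, rewritten, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        # A "replace" removes characters from the original and adds new ones.
        if tag in ("delete", "replace"):
            deleted += i2 - i1
        if tag in ("insert", "replace"):
            inserted += j2 - j1
    return deleted, inserted


def modification_ratio(original: str, rewritten: str) -> float:
    """Fraction of characters touched by the rewrite (0.0 = unchanged)."""
    deleted, inserted = count_char_edits(original, rewritten)
    return (deleted + inserted) / max(len(original) + len(rewritten), 1)


def looks_machine_generated(
    text: str,
    rewrite: Callable[[str], str],  # hypothetical: wraps an LLM rewrite prompt
    threshold: float = 0.2,         # assumed cutoff, not taken from the paper
) -> bool:
    """Few modifications suggest machine-generated text; many suggest human."""
    rewritten = rewrite(text)
    return modification_ratio(text, rewritten) < threshold
```

In use, `rewrite` would send the text to an LLM with a rewriting prompt and return its reply. A fixed cutoff is the simplest possible decision rule; a practical detector would more likely feed the edit measurements into a classifier trained on labeled examples.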

Raidar's accuracy is noteworthy: it surpasses previous methods by up to 29%. This leap in performance is achieved using state-of-the-art LLMs to rewrite the input, without needing access to the AI's architecture, algorithms, or training data, a first in the field of AI-generated text detection.

Raidar is also highly accurate on short texts and snippets. This is a significant breakthrough, as prior techniques have required long texts to achieve good accuracy. Assessing accuracy and detecting misinformation are especially crucial in today's online environment, where brief messages, such as social media posts or internet comments, play a pivotal role in information dissemination and can have a profound impact on public opinion and discourse.

Authenticating digital content

In an era where AI's capabilities continue to expand, the ability to distinguish between human and machine-generated content is critical for upholding integrity and trust across digital platforms. From social media to news articles, academic essays to online reviews, Raidar promises to be a powerful tool in combating the spread of misinformation and ensuring the credibility of digital information.

"Our method's ability to accurately detect AI-generated content fills a crucial gap in current technology," said the paper’s lead author Chengzhi Mao, a former PhD student at Columbia Engineering and a current postdoc with Yang and Vondrick. "It's not just exciting; it's essential for anyone who values the integrity of digital content and the societal implications of AI's expanding capabilities."

What’s next

The team plans to broaden its investigation to other text domains, including multilingual content and a range of programming languages. They are also exploring the detection of machine-generated images, videos, and audio, aiming to develop comprehensive tools for identifying AI-generated content across multiple media types.

The team is working with Columbia Technology Ventures and has filed a provisional patent application.